Irreducible Complexity

The theory of natural selection proposes that small changes over millions of years can produce increasing complexity through the gradual accumulation of beneficial mutations, yielding increasingly complex structures or pathways. Mutations that are not beneficial lessen the organism’s ability to survive and are gradually bred out.

Richard Dawkins – By Matthias Asgeirsson from Iceland – Richard Dawkins on Flickr, CC BY-SA 2.0, Link

The gradual accumulation of increasingly complex functionality might produce some beneficial changes over long time frames – theoretically. The atheist Richard Dawkins has attempted to provide computer evidence that evolution could spontaneously produce new information in a closed system.

The ability to produce new information without outside interference (a “closed system”) is one of the biggest obstacles facing origin-of-life scientists today. Biological systems are extremely complex, requiring vast amounts of information and tight integration for the system to survive.

The Computer Did It!

Dawkins proposed in his book The Blind Watchmaker that such information could arise spontaneously given a sufficient number of generations. To illustrate this, he wrote a computer program intended to simulate an evolutionary outcome, and described the result as follows:

Nothing in my biologist’s intuition, nothing in my 20 years’ experience of programming computers, and nothing in my wildest dreams prepared me for what emerged on the screen…. With a wild surmise, I began to breed, generation after generation, from whichever child looked most like an insect. My incredulity grew in parallel with the evolving resemblance…. I still cannot conceal from you my feeling of exultation as I first watched these exquisite creatures emerging before my eyes. I distinctly heard the triumphal opening chords of Also sprach Zarathustra (the ‘2001 theme’) in my mind.

The problem with most computer programs that claim to produce systems with new information is that they selectively preserve “good” mutations before those mutations have had a chance to confer a survival advantage. A critique of Dawkins’s programs, as well as of multiple others written over the intervening years, can be found here and here.
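The critique above can be made concrete with a minimal sketch of a target-matching selection loop. This is not Dawkins’s own code; the alphabet, mutation rate, number of offspring, and all function names are assumptions chosen for illustration. The point to notice is that `score` compares every candidate against a target fixed in advance, so partial matches are kept before the complete phrase exists.

```python
import random

ALPHABET = "ABCDEFGHIJKLMNOPQRSTUVWXYZ "  # assumed alphabet: capitals plus space


def score(candidate, target):
    """Count positions where the candidate already matches the fixed target."""
    return sum(c == t for c, t in zip(candidate, target))


def mutate(parent, rate, rng):
    """Copy the parent, replacing each character with a random one at the given rate."""
    return "".join(
        rng.choice(ALPHABET) if rng.random() < rate else c for c in parent
    )


def evolve(target, offspring=100, rate=0.05, seed=0, max_generations=10_000):
    """Return the number of generations needed to reach the target, or None."""
    rng = random.Random(seed)
    parent = "".join(rng.choice(ALPHABET) for _ in target)
    for generation in range(max_generations + 1):
        if parent == target:
            return generation
        children = [mutate(parent, rate, rng) for _ in range(offspring)]
        # Selection step: whichever string is closest to the known target is
        # kept, so intermediate strings are rewarded for resembling the goal.
        parent = max(children + [parent], key=lambda s: score(s, target))
    return None
```

Calling `evolve("METHINKS IT IS LIKE A WEASEL")` returns the number of generations this sketch needed to reach the phrase. Replacing `score` with a function that has no knowledge of the target removes the guidance that the critique is concerned with.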

However, there are some very complex cellular processes that could not be manufactured using that mechanism. Some mechanisms, such as cellular machines, have a feature termed “irreducible complexity.” This feature is present when any simpler version of the machine would not just lose some functionality but would lose its functionality altogether.

Irreducible complexity poses a significant and insurmountable obstacle to the production of more complex life as envisioned by the traditional evolutionary paradigm. This paradigm assumes all life arose from simpler life through the gradual accumulation of beneficial mutations, eventually resulting in the evolution of man from bacteria.

In fact, since all life uses essentially the same biological code found in DNA, all life is purported to have arisen from a common ancestor eons ago.

However, recent evidence casts considerable doubt on this central evolutionary paradigm. One of these pieces of evidence is the inability of gradual processes to produce mechanisms that cannot be simplified without loss of function.

Examples of Cellular Machines

These YouTube videos present animations giving some idea of cellular machines in action.

Common Descent and Natural Selection

All animals within a group of animals are thought to have descended from a common ancestor. For example, all dogs are thought to arise from a common ancestor – probably a wolf. All animals with a backbone (vertebrates) are thought to arise from a common ancestor, and all multicellular organisms are thought to arise from a single-celled organism.

This idea is known as universal common descent. This was the central idea proposed by Darwin; that all life “evolved” from a common ancestor. The descendants of that common ancestor billions of years ago gradually assumed increasing complexity due to genetic variability. While most of these genetic changes were catastrophic resulting in dead organisms, several were beneficial resulting in an increased chance for survival.

This increased chance for survival from beneficial genetic changes gave the organism a greater chance to produce offspring which also possessed these beneficial traits allowing them to gradually displace those organisms without these beneficial changes.
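The displacement described above can be sketched with a simple one-locus selection model. This model is an assumption introduced here for illustration, not something taken from the sources discussed in this post: carriers of the beneficial change are taken to reproduce at a relative rate of `1 + s`, and the starting frequency `p0`, the advantage `s`, and the function names are all hypothetical.

```python
def next_frequency(p, s):
    """One generation of selection: carriers reproduce at relative rate 1 + s."""
    return p * (1 + s) / (1 + p * s)


def generations_to_reach(p0, s, threshold=0.99, max_generations=1_000_000):
    """Count generations until carriers make up at least `threshold` of the population."""
    p = p0
    for generation in range(max_generations + 1):
        if p >= threshold:
            return generation
        p = next_frequency(p, s)
    return None  # threshold not reached within max_generations
```

For example, `generations_to_reach(0.001, 0.01)` gives the number of generations a variant that starts in one organism per thousand would need to reach 99% of the population with a 1% reproductive advantage under these assumptions. The model says nothing about where the beneficial change comes from; it only tracks how a change that already exists would spread.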

Over time, these beneficial changes accumulated, resulting in gradually increasing complexity and allowing a bacterium to become a man.

Microevolution and Macroevolution

It is important to distinguish between microevolution and macroevolution. Many people have observed microevolution in their environment. For example, the beaks of certain finch species may vary in size and shape, or birds without predators may lose their ability to fly. Microevolution is undeniable, and it is often within the experience of the average observant person to notice these changes in their environment.

Macroevolution is another thing entirely. This process is thought to produce fundamentally different biological structures and new forms of life. It was thought that macroevolution would result if enough microevolution events were stacked up over time – the beak of a bird might become the mouth of a cat.

This idea was expressed by the Russian-American evolutionary biologist Theodosius Dobzhansky in his 1937 work Genetics and the Origin of Species. He noted,

We are compelled at the present level of knowledge reluctantly to put a sign of equality between the mechanisms of macro and microevolution.

Dobzhansky noted that microevolution is a common experience of scientists; we can observe it and construct experiments demonstrating it. In microbes that reproduce rapidly to an enormous population, there might be millions of spontaneous mutations, of which several thousand are beneficial. If a beneficial mutation makes an organism more likely to survive, that organism is more likely to leave descendants, and future generations that inherit the mutation will have a better chance of survival.

Dobzhansky admitted he was unable to produce macroevolution, defined as the production of new organisms above the species level, such as the evolution of a dog into a cat or a rabbit. He settled for the demonstration of microevolution.

This might be observed as the change of beak size, insect color, or fruit fly eye color over time due to a shuffling of genes but no new genetic information. This would explain, for example, the different breeds of dogs, different strains of corn, or different races of humans. Each of these has roughly the same genetic information with the observed differences largely due to a shuffling of genes or gene frequencies.

Competing Explanations

Cytochrome Protein – By Klaus Hoffmeier – Own work, Public Domain, Link

No scientist in their lifetime has ever directly observed macroevolution defined as the production of new life above the species level. The science of biological origins naturally becomes a historical science where evidence is gleaned from observation of past events to explain current results.

There is a rich tradition in historical science, as it is one of the most mature and well-developed disciplines, with methods to evaluate the cause of current events. Historical sciences study clues in the present to determine the cause of past events. Sometimes there are multiple potential causes of a past event, each of which seems to have an approximately equal probability of being correct. There will be well-respected scientists on each side of the great divide with no clear winner. If a decisive clue can be found that makes one potential cause of a past event more likely, then one explanation will replace the other.

This process is referred to as abductive reasoning – the best explanation is the simplest and most likely explanation of an event or process. Another understanding is that abductive reasoning is the inference to the best explanation. Unlike deductive reasoning, abductive reasoning does not try to produce a logical proof of a conclusion, only that the conclusion is more likely and has greater explanatory power than other conclusions.

This form of reasoning is most useful in situations where current evidence is unable to conclusively distinguish among multiple explanations.

As a side note, abductive reasoning is frequently used in medicine to arrive at a diagnosis. Generally, a physician will take into account all the evidence currently available, and derive a list of potential diagnoses called a “differential diagnosis.” The most likely explanation is at the top of the differential list and then appropriately acted upon. A common aphorism among physicians is that if it looks like a duck, walks like a duck, and quacks like a duck – it’s probably a duck.

Occam’s Razor

Another logical method for determining the most likely explanation for a historical event is something called Occam’s Razor. This idea is attributed to an English Franciscan friar, William of Ockham (1287 – 1347), a scholastic philosopher and theologian who proposed the Razor: the simplest explanation is usually the correct one. This principle is also known as the law of parsimony. Most importantly for our discussion, simpler reasons for a historical event are preferable because they can be more easily falsified.

The origin of life can be subjected to the philosophical principles of abductive reasoning and the law of parsimony. There are two competing explanations for the origin of life that are most commonly advanced today: natural evolution, whereby humans arose through natural evolutionary processes from bacteria, and intelligent design, where life did not arise by chance but rather through the intervention of a designer. The identity of this “designer” is not important for the Intelligent Design proponent.

The evolution of a bacterium into a human through eons of mindless evolution requires an extraordinarily complicated process, so complicated in fact that it remains a mystery. Origin-of-life scientists once had great optimism that they would be able to completely explain how life originated on Earth without intervention from a Designer. To date, however, their original optimism that an adequate natural explanation can ever be obtained has dissipated.

The law of parsimony can be applied easily to the problem of abiogenesis.

- Natural evolution requires a huge number of steps across billions of years using an unknown process to produce vast complexity only through mindless incremental improvements,

- Intelligent Design suggests the creation of vast complexity requires intelligence; this is the common requirement of any complex information-laden system and provides much greater explanatory power than natural evolution.

Life Proteins and Irreducible Complexity

It is common knowledge that all life depends on proteins, especially enzymatic proteins. There are about 75,000 different enzymes in the human body, each folded into a three-dimensional pattern that produces an “active site,” which provides the enzymatic activity.

The vast majority of proteins produced in non-biological situations will not even fold into a three-dimensional structure, let alone produce anything like an active site. In living systems, proteins are also connected in complicated molecular pathways in which each protein requires the others for the entire operation to work.

A recent peer-reviewed journal article examined the probability of these pathways and metabolic machines coming together through chance assembly and concluded,

fine-tuning is a clear feature of biological systems. Indeed, fine-tuning is even more extreme in biological systems than in inorganic systems. It is detectable within the realm of scientific methodology. Biology is inherently more complicated than the large-scale universe and so fine-tuning is even more a feature.

Mousetrap

The “fine-tuning” refers to the exacting nature of the construction of each of the enzymatic proteins, and their assembly into an auto-regulated metabolic pathway to enable life. Additionally, life proteins can be assembled into machines resembling modern motors and appear to be carefully assembled by a designer to perform a specific function.

Many of these pathways and machines are made such that the subtraction of any subunit would destroy the function of the whole unit. Michael Behe proposed the model of a mousetrap to illustrate irreducible complexity. The mousetrap is a very simple contrivance compared to the fantastic complexity of life, but it illustrates the concept quite well. For the mousetrap to perform its design function of catching a mouse, all the parts must be in place and must function properly. None of the pieces can be missing or placed incorrectly for the mousetrap to perform its function.

The mousetrap cannot be made one piece at a time through millennia of evolution but must instead be

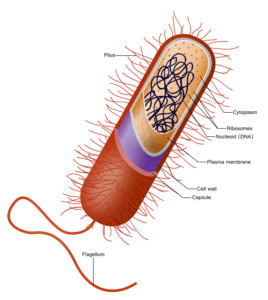

Typical bacteria – By This vector image is completely made by Ali Zifan – Own work; used information from Biology 10e Textbook (chapter 4, Pg: 63) by Peter Raven, Kenneth Mason, Jonathan Losos, Susan Singer · McGraw-Hill Education., CC BY-SA 4.0, Link

constructed all at once. Pieces of a mousetrap will not provide an effective device. Additionally, all of these individual pieces must be put together in the proper way for the trap to work; otherwise, the metabolic cost of making a mousetrap would be wasted.

Similarly, a bacterial flagellum must be put together all at once and not through prolonged millennia of slow, unguided evolution as it could not function unless it was entirely assembled the proper way.

This article demonstrates the fantastic complexity of the flagellum, which in itself represents only a small portion of the bacterium. All the pieces for the flagellum have to be individually made, and then properly put together, for the little flagellum to work. The problem is that the flagellum will not work properly unless it is put together the right way with the right building blocks.

The key understanding is that the bacterial flagellum could not be made by successive small mutations or modifications because the whole structure is required for the flagellum to work.

Darwin, the Flagellum, and Irreducible Complexity

Darwin recognized the importance of this concept in his original work, On the Origin of Species. He noted,

Natural selection acts only by taking advantage of slight successive variations; she can never take a great and sudden leap, but must advance by short and sure, though slow steps …

If it could be demonstrated that any complex organ existed which could not possibly have been formed by numerous, successive slight modifications, my theory would absolutely break down.

There has never been any process proposed whereby the bacterial flagellum could be produced through the gradual accumulation of small changes. This lack of progress satisfies Darwin’s definition for the “break down” of his theory.

The best explanation through abductive reasoning and Occam’s Razor is that the bacterial flagellum, and all the hugely complicated processes which occur during cellular function, were much more likely purposefully designed than produced through the blind accumulation of small changes.

Summary

One of the most fascinating discoveries of the past few years has been the fantastic complexity of the living cell. Part of this complexity is the existence of actual cellular machines, including the bacterial flagellum, the machinery that makes proteins from a code carried by messenger RNA transmitting instructions from DNA, and the machinery that contracts muscle fibers, to name just a few.

The important point is that these machines are exquisitely complex, involving the interactions of many specialized proteins, all of which must be present and working properly.

The cellular machines demonstrate irreducible complexity. They could not be made by small progressive changes over time because any simpler machines do not work. There is no pathway from a simpler machine to a complex one because the simpler machine could not be functional.

Furthermore, mutations that might be beneficial in one respect usually involve the loss of information and degradation of cellular functioning leading to eventual cellular death. This conclusion is supported by Lenski’s work which is evaluated in another blog post.